I am an associate researcher at the Centre Internet et Société of the CNRS and, since 2009, a member of La Quadrature du Net, an association dedicated to defending human rights in the context of computerization.

My action-research work lies at the intersection of political history and theory, law, and media and technology studies. It focuses on the political history of the Internet and of computing, on practices of power such as censorship and the surveillance of communications, on the algorithmic governmentality of public space and, more broadly, on the digital transformation of the state and of the security field.

I have worked at, among other places, Harvard University's Berkman Klein Center for Internet & Society, the Centre de recherches internationales at Sciences Po, and the Institut des Sciences de la Communication of the CNRS. In late 2021, I was a visiting researcher at the WZB Berlin Social Science Center.

Recent publications

Contre-histoire d’Internet

Through an entangled history of the state and of the political struggles surrounding the means of communication, this book explains why the emancipatory project associated with the Internet has been defeated, and how new technologies are being used for ever tighter social control.

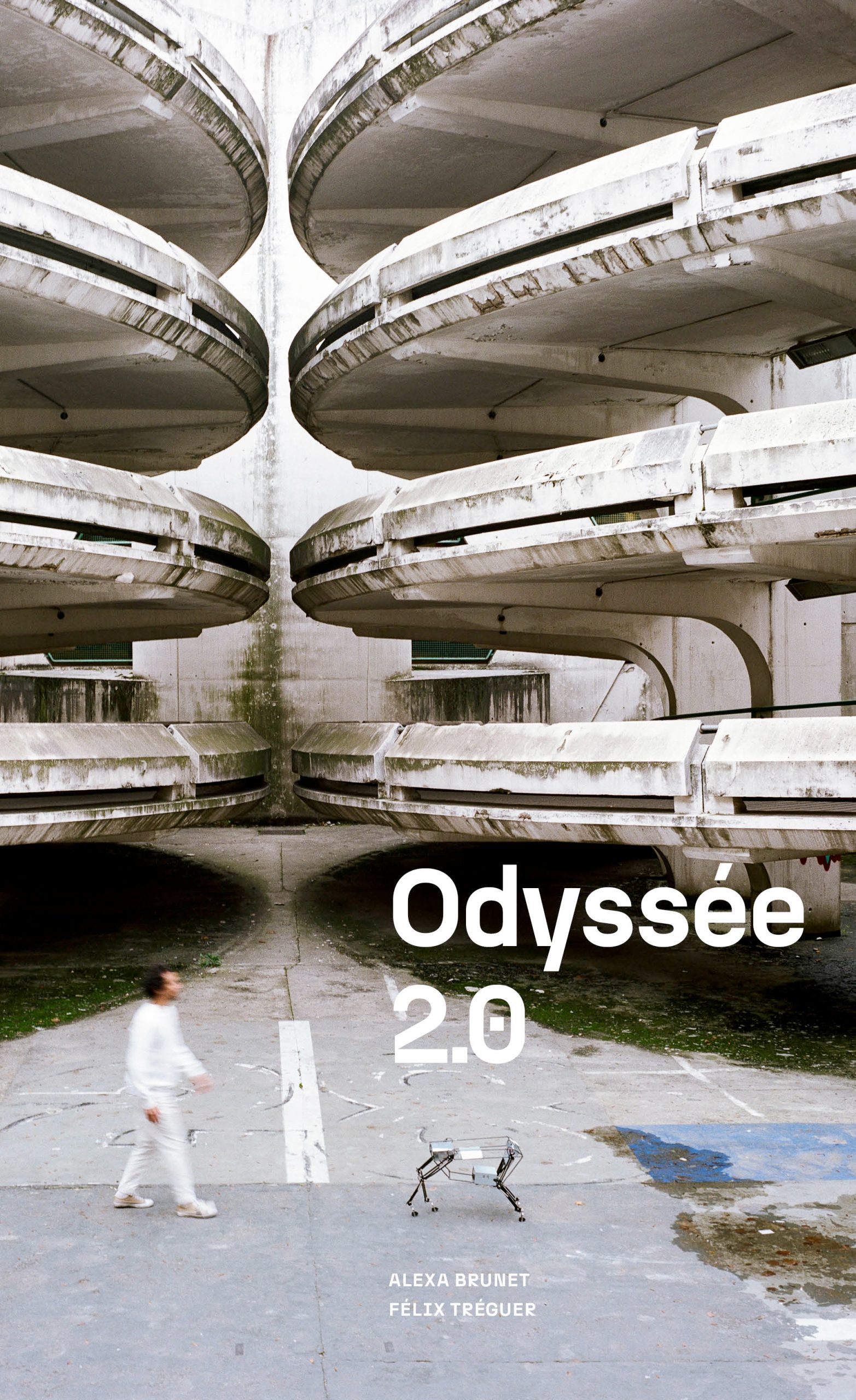

Odyssée 2.0

Odyssée 2.0 is a photographic and literary journey, loosely inspired by Homer's epic, that follows Ulysses' wanderings through Technopolis, a fictional, dystopian "Smart City", in order to invite critical reflection on the growing technologization of social control.